In what can only be described as a blatant attempt to control AdWords data, Google has started deleting AdWords API developer tokens en masse, based on thresholds and reasons seemingly known only to Google.

I received the following email this afternoon, which I initially thought had been sent in error:

> As stated in the AdWords API Terms and Conditions (Section II.4), we periodically review AdWords API activity. We noticed that there has been low usage of the AdWords API developer token associated with your My Client Center (MCC) manager ID XXXXXXXXXX in the last 30 days. For the purpose of ensuring quality, improving Google products and services and compliance with AdWords API Terms and Conditions, we have disabled this token.
>
> If you wish to re-apply for the token, please visit the AdWords API Center in your account. Remember to answer the following in detail if you re-apply for the token:
>
> - Describe the uses of your API application or tool with specific examples. For instance, account management or bid optimization.
>
> - Who is or will be using your API application or tool? For example, colleagues in your company or advertisers or agencies to whom you are selling the tool.
>
> - Please attach screenshots of your API application or tool. If the application or tool is yet to be developed, please provide relevant design documentation.
>
> - Please provide a list of clients that will be using your API application or tool in an automated way.
>
> Please know that we will take between 5 and 6 weeks to process all developer token re-applications.
>
> Regards,
>
> The AdWords API Team

TL;DR: we got screwed for ‘low volume’ usage. We can request re-inclusion, but it will take 5 to 6 weeks.

It would be great to know what Google considers ‘low volume’. The developer token in question is used by our research tools, which matches the description in our original application, and makes under 500,000 API calls per month. Most of that usage comes in a single burst when we pull fresh data. The rest is ad-hoc usage throughout the month as new data is requested (admittedly, these are mostly KeywordEstimator queries). The thing is, people on the AdWords support forums who make more than 8 million API calls per month have also been disabled. So what exactly constitutes low volume, and how much data do we need to pull in order to get re-included?

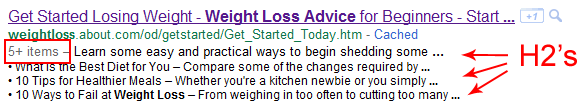

My theory is that these blanket rejections have nothing at all to do with volume of usage. Instead, I think they are entirely about specific usage patterns. Google doesn’t like third-party apps using its keyword volumes for automated keyword research, because it sees this as ‘gaming’. Instead, it wants to control the spread of this data through CAPTCHA-protected properties of its own.

This is, of course, great news for tools like Wordtracker. Once they get their own access back (and they will), they will likely clean up in the SEO market as everyone looks for an alternative way to access AdWords data outside the Google API.

In a